Generative AI: A New Cost Center for Software Companies

Generative AI comes at a cost. Inference is expensive, and developing AI solutions costs far more than building conventional features.

Consider this scenario:

You’re an aftermarket developer and consultant for a no-code database platform.

You have an idea that requires generative AI, one that could let the no-code platform deliver solutions that were impossible before integrated AI arrived.

You sketch a prototype and eventually begin building and testing AI prompts. You know the prompts will be a major challenge, but you believe they can meet or exceed the requirements and produce deterministic results.

During this extensive R&D process, you burn through a month’s worth of AI credits in half a day, with many days of prompt development and testing still ahead of you.

You have to buy several inference credit upgrades. Your financial investment keeps rising, and you still aren’t confident your clever approach will work, let alone be profitable.

Unbounded generative AI costs create a disincentive that most developers will feel as LLM spending climbs during development.

GitHub Copilot has reportedly been costing Microsoft up to $80 per user per month in some cases as the company struggles to make its AI assistant turn a profit.

According to a Wall Street Journal report citing an unnamed person familiar with the figures, the Microsoft-owned service was losing an average of $20 per user per month in the first few months of 2023.

There are many indications that GPUs are scarce and that demand is growing fast enough to put heavy pressure on securing LLM access.

I keep asking myself:

Is generative AI on the edge of a reckoning that will roll back its rampant adoption?

Ironically, non-believers first claimed that hallucinations or crappy outputs would cap AI adoption. Instead, cost may be the limiter that puts a sizable dent in the adoption trajectory.

One thing is sure in this little cesspool of AI development uncertainty: aftermarket developers and consultants may never discover the next great AI-centric feature that makes users want to pay future AI fees, UNLESS the disincentives to AI solution development are removed.

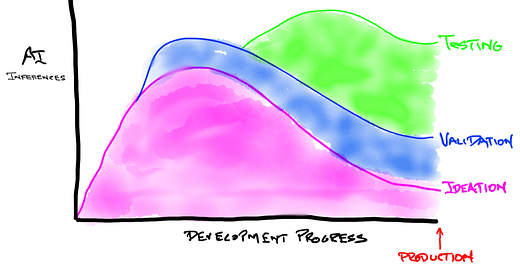

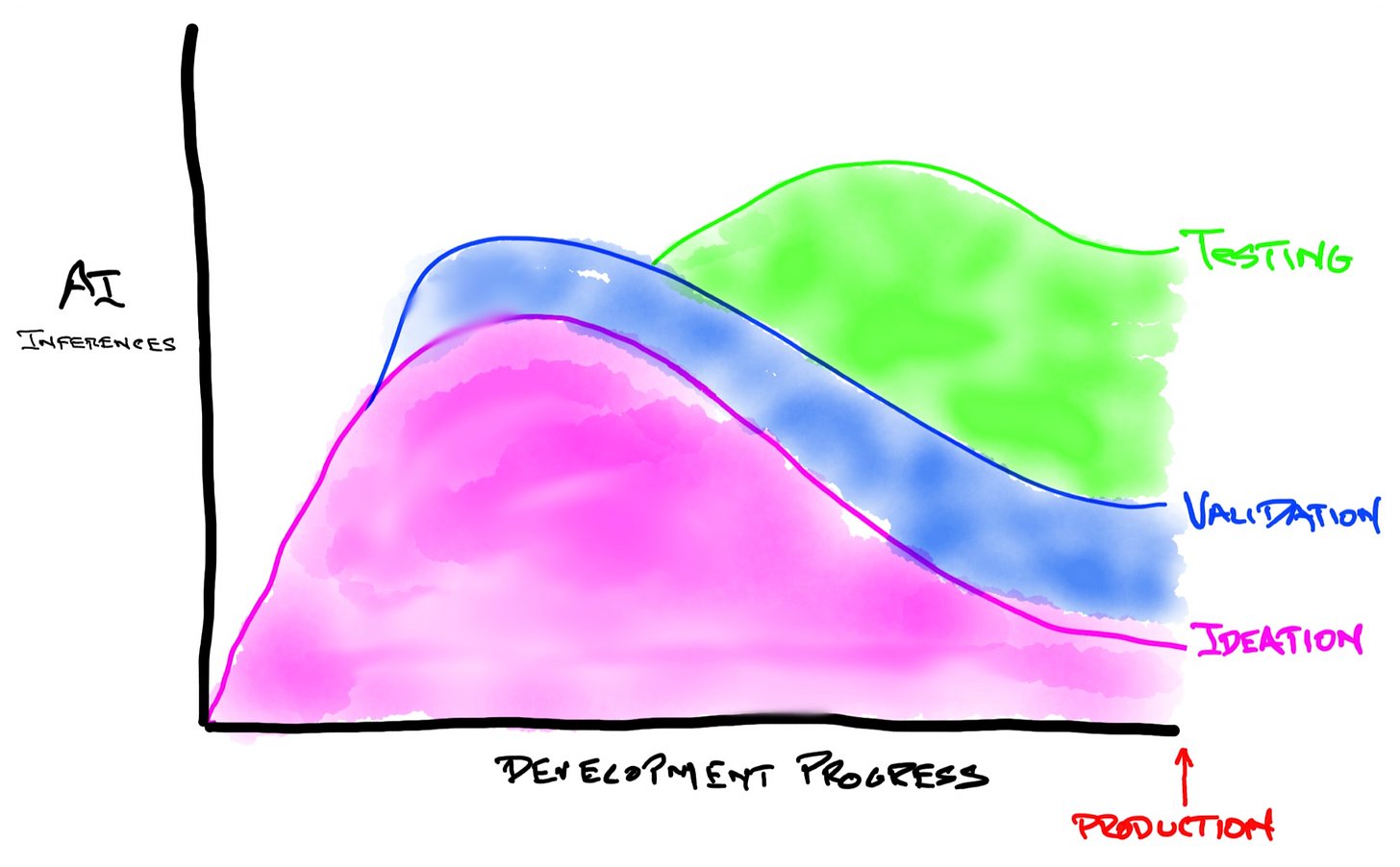

This hastily prepared diagram shows three layers of AI costs borne by aftermarket developers and enterprise builders. Given the effort needed to push a generative AI solution into production, none of these layers is insignificant. When building an AI solution means paying for inferences throughout development and testing, those costs act as a tax on innovation. That is a problem for any platform whose goal is broad adoption of the platform and its AI features, because those features depend on builders to shape the tool to specific fitness objectives.

I personally have many exciting ideas and possible use cases that depend on various platform AI features. Still, if I must pay for the platform and, additionally, for the hyper-consumption of inferences required to create something far beyond usual and customary volumes of AI use, I won’t use that platform to do it. I’ll seek another path, one that probably won’t include a platform that charges retail prices for LLM use during R&D.

Platform providers that want users to pay a premium for AI inferences must demonstrate why AI adoption and upgrades are in the customer’s best interest. The pre-AI value proposition was easy to communicate. Now you have a tiger by the tail … and that tiger has teeth.

A distributed network of value-adders has never been more critical in the history of software tools.

This new breed of developers and aftermarket providers could be described as hyper-partners: entities skilled in your product AND in generative AI, working as an extension of your development team. Generative AI has fractured the very idea of a development team. No internal team can cover every AI possibility; this new horizon of opportunity must be distributed and scalable, and keeping these partners engaged may require compensating them.

But that requires a slightly different engineering and financial model, one whose incentives attract scarce external talent to create an abundance of solutions and templates.

This trend is neither well understood nor widely discussed, but in the past ten days alone, three no-code platform companies have invited me to work for them. All of them are desperate to shore up their AI templates, approaches, and documentation while also giving their engineering teams additional requirements guidance.