Many people who discover the advantages of building on OpenAI's example experiences, such as ChatGPT, assume they should build their own chatbots using prompts – and ONLY prompts – engineered to elicit specific behaviours. While that is possible in many cases, there are at least three issues with this approach:

1. OpenAI interfaces are intended to be broad examples, and they could change, possibly upsetting the performance or operation of your own solution.

2. The cost of sustaining conversational awareness could be financially impractical unless you craft the solution with a mix of OpenAI APIs and other conventional technologies, such as embedding vectors, real-time data handlers, script automation, etc.

3. There are likely additional requirements that you haven’t yet encountered. Locking your technical approach into a prompt-only strategy imposes a ceiling that is probably too low for challenges your current vision doesn’t anticipate.

Sidebar: #3 is advice I give my clients about developing AI systems: always start with a requirements document, even if it’s just a list of bullet points.

My Approach

I created this example FAQ system using only a Google Sheets spreadsheet and a few Apps Script functions that create embedding vectors for each Q&A interaction. The app's process is extremely simple:

1. Vectorize the new user question.

2. Find the top three candidates in the vector store (i.e., the spreadsheet) using dot-product similarity comparisons.

3. Use the content from the top three hits to create a completion prompt that asks GPT to generate the best final answer for the customer.
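The first two steps can be sketched in a few lines. This is a minimal Python illustration, not the Apps Script used in the project: `dot`, `top_matches`, `find_candidates`, the row layout, and the injected `embed` function are all hypothetical names, and the toy vectors are invented. In a real build, `embed` would call an embeddings API and the rows would be read from the spreadsheet. OpenAI documents its embeddings as normalized to length 1, in which case ranking by dot product gives the same order as cosine similarity.

```python
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def top_matches(query_vec: Vector, rows: List[Dict], k: int = 3) -> List[Dict]:
    """Return the k rows whose stored vectors score highest against query_vec.

    Each row is assumed to be a dict with 'question', 'answer' and 'vector' keys.
    """
    scored = [(dot(query_vec, row["vector"]), row) for row in rows]
    # Sort on the score only, highest first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [row for _, row in scored[:k]]


def find_candidates(
    question: str, rows: List[Dict], embed: Callable[[str], Vector], k: int = 3
) -> List[Dict]:
    """Step 1 (vectorize the question) followed by step 2 (rank stored rows)."""
    return top_matches(embed(question), rows, k)


if __name__ == "__main__":
    # Toy 3-dimensional vectors, invented for illustration only;
    # real embedding vectors have far more dimensions.
    faq = [
        {"question": "When will CyberLandr become available?", "answer": "...", "vector": [0.9, 0.1, 0.0]},
        {"question": "When will the CyberLandr prototype be completed?", "answer": "...", "vector": [0.2, 0.9, 0.0]},
        {"question": "Do I have to take delivery before my Cybertruck is available?", "answer": "...", "vector": [0.7, 0.7, 0.0]},
        {"question": "An unrelated question", "answer": "...", "vector": [0.0, 0.0, 1.0]},
    ]

    def fake_embed(text: str) -> Vector:
        # Stand-in for a real embeddings call.
        return [1.0, 0.0, 0.0]

    for row in find_candidates("When and where do I take delivery of my CyberLandr?", faq, fake_embed):
        print(row["question"])
```

A linear scan like this is reasonable for a narrow FAQ held in a spreadsheet; a much larger corpus would likely call for a dedicated vector index.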

The chat application itself can be hosted on a website or run as a sidebar app in Google Sheets or Docs. In this example, I used Firebase Hosting.

NOTE: This app was created to demonstrate the underlying mechanics of embeddings and similarity search to create a functional chatbot for Q&A. If you’d prefer to just make some stuff work, you might want to look at CustomGPT; it insulates you from a lot of the AI technology while also making it really easy to build and host new “models” built from dozens of business documents in various formats.

Maintaining Conversational Memory

While this is a simple Q&A process, my approach also maintains a conversational context by storing (in client memory) a session object. This object contains the user’s questions, the answers we provide, and the relevant vectors. This allows me to monitor the conversation by generating an ongoing summary of the interactions as well as a list of keywords and entities that appear in the conversation as it unfolds. These additional assets about the conversation can be created and updated with GPT itself.
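As a rough picture of what such a session object might hold, here is a minimal Python sketch. The class and field names (`Session`, `Turn`, `summary`, `keywords`, `transcript`) are assumptions made for illustration, not the structure used in the project, and the summary and keyword fields are simply slots that a separate GPT call would fill in.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    """One question/answer exchange plus the vector computed for the question."""
    question: str
    answer: str
    question_vector: List[float]


@dataclass
class Session:
    """In-memory record of a conversation (hypothetical layout)."""
    turns: List[Turn] = field(default_factory=list)
    summary: str = ""  # running summary, e.g. regenerated by GPT after each turn
    keywords: List[str] = field(default_factory=list)  # keywords and entities seen so far

    def record(self, question: str, answer: str, question_vector: List[float]) -> None:
        """Append one completed exchange to the session."""
        self.turns.append(Turn(question, answer, list(question_vector)))

    def transcript(self, last_n: int = 5) -> str:
        """Plain-text view of the most recent turns, usable as input to a summarization prompt."""
        recent = self.turns[-last_n:] if last_n > 0 else []
        return "\n".join(f"Q: {t.question}\nA: {t.answer}" for t in recent)
```

After each exchange, the application would call `record(...)`, pass `transcript()` to a summarization prompt, and store the result back in `summary` and `keywords`.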

For my FAQ requirements with CyberLandr, the session object is not needed to answer questions; the FAQ corpus is extremely narrow, making it unnecessary to inject reminders into subsequent prompts. However, I still keep it for analytics and for improving the FAQ content corpus (all customer interactions are tracked to improve the app). The same approach could be used to give a chat service an ongoing memory of what has transpired.

Other tools such as LangChain and AutoGPT may also be helpful in building better chatbot systems to support conversational continuity.

Under the Covers

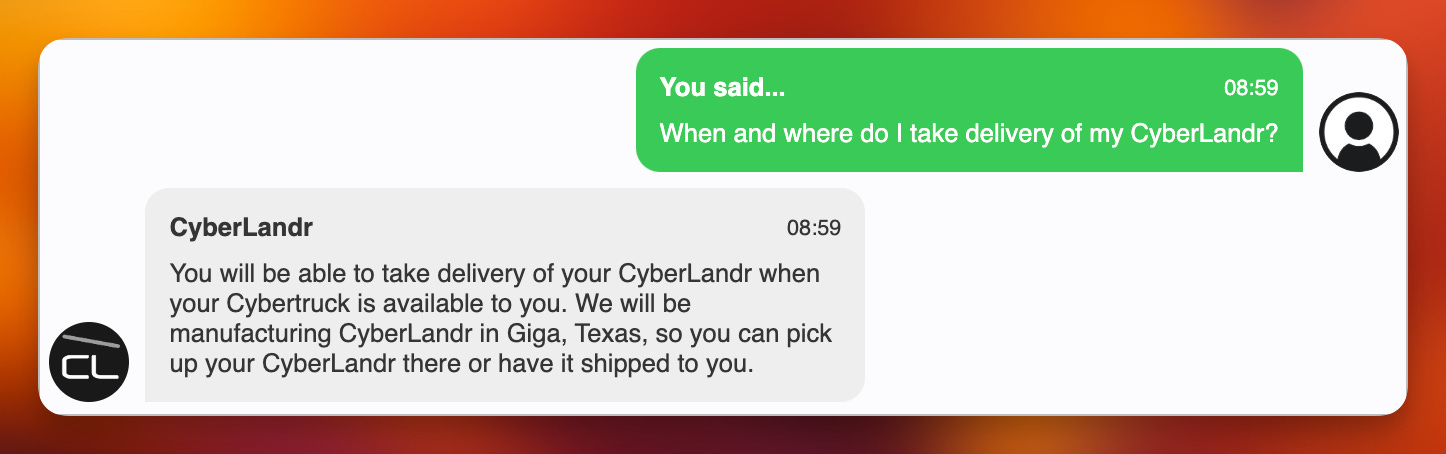

Given this example question:

When and where do I take delivery of my CyberLandr?

This completion prompt is crafted:

Answer the Question using the best response and rewrite the answer to be more natural.

Question: Do I have to take delivery of CyberLandr before my Cybertruck is available to me?

Answer: No. One of the nice things about our reservation system is that it matches the Tesla approach. You are free to complete your CyberLandr purchase when you have your Cybertruck to install it into. With all of the unknowns concerning Cybertruck's production timeline, we are making it as flexible as possible for truck buyers to marry CyberLandr with their vehicle as soon as it comes off Tesla's production line. This is why we will be manufacturing CyberLandr in Giga, Texas.

Question: When will CyberLandr become available?

Answer: We anticipate CyberLandr will be ready for delivery approximately when Tesla begins producing and shipping Cybertruck to its list of reserved purchases.

Question: When will the CyberLandr prototype be completed?

Answer: There will be many prototypes before we ship. We will share info about some of those with the CyberLandr community (reservation holders). And we will unveil the final production prototype publicly before production begins.

Question: When and where do I take delivery of my CyberLandr?

Answer:

Resulting in this response:

Let’s recap this process with some helpful code and a link to the spreadsheet project.
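As one possible shape for the prompt-assembly step, here is a minimal Python sketch rather than the project's Apps Script. `build_prompt` and the layout of `hits` are hypothetical names chosen for illustration; the instruction line and the Question/Answer layout are copied from the example prompt in this post.

```python
from typing import Dict, List

# Instruction line taken from the example prompt in this post.
INSTRUCTION = (
    "Answer the Question using the best response and rewrite the answer "
    "to be more natural."
)


def build_prompt(user_question: str, hits: List[Dict[str, str]],
                 instruction: str = INSTRUCTION) -> str:
    """Assemble a completion prompt from the top FAQ hits.

    Each hit is assumed to be a dict with 'question' and 'answer' keys.
    The prompt ends with a bare 'Answer:' so the completion supplies the reply.
    """
    parts = [instruction]
    for hit in hits:
        parts.append(f"Question: {hit['question']}")
        parts.append(f"Answer: {hit['answer']}")
    parts.append(f"Question: {user_question}")
    parts.append("Answer:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    hits = [
        {
            "question": "When will CyberLandr become available?",
            "answer": "We anticipate CyberLandr will be ready for delivery "
                      "approximately when Tesla begins producing and shipping Cybertruck.",
        },
    ]
    print(build_prompt("When and where do I take delivery of my CyberLandr?", hits))
```

The resulting string would then be sent to a completion endpoint; that call is left out here because it depends on the model and client library in use.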
